Result

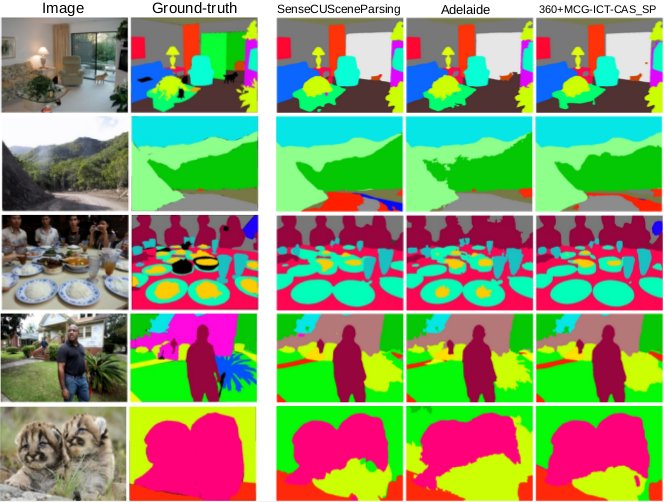

There are totally 75 submissions from 22 teams worldwide. The team of SenseCUSceneParsing won the 1st place with the score 0.57205. The team of Adelaide won the 2nd place with the score 0.56735, while the team of 360+MCG-ICT-CAS_SP won the 3rd place with the score 0.55565. The leaderboard and team information are below. Thanks for all the participants of the 1st Scene Parsing Challenge! Some of the prediction results by the top 3 teams are shown below:

Final Leaderboard:

Legend:

- Yellow background- winner in this task according to this metric & authors are willing to reveal the method

- White background - authors are willing to reveal the method

- Grey background- authors chose not to reveal the method

| Team name | Entry description | Average of mIoU and pixel accuracy |

| SenseCUSceneParsing | ensemble more models on trainval data | 0.57205 |

| SenseCUSceneParsing | dense ensemble model on trainval data | 0.5711 |

| SenseCUSceneParsing | ensemble model on trainval data | 0.5705 |

| SenseCUSceneParsing | ensemble model on train data | 0.5674 |

| Adelaide | Multiple models, multiple scales, refined with CRFs | 0.56735 |

| Adelaide | Multiple models, multiple scales | 0.56615 |

| Adelaide | Single model, multiple scales | 0.5641 |

| Adelaide | Multiple models, single scale | 0.5617 |

| 360+MCG-ICT-CAS_SP | fusing 152, 101, 200 layers front models with global context aggregation, iterative boosting and high resolution training | 0.55565 |

| Adelaide | Single model, single scale | 0.5539 |

| SenseCUSceneParsing | best single model on train data | 0.5538 |

| 360+MCG-ICT-CAS_SP | fusing 152, 101, 200 layers front models with global context aggregation, iterative boosting and high resolution training, some models adding local refinement network before fusion | 0.55335 |

| 360+MCG-ICT-CAS_SP | fusing 152, 101, 200 layers front models with global context aggregation, iterative boosting and high resolution training, some models adding local refinement network before and after fusion | 0.55215 |

| 360+MCG-ICT-CAS_SP | 152 layers front model with global context aggregation, iterative boosting and high resolution training | 0.54675 |

| SegModel | ensemble of 5 models, bilateral filter, 42.7 mIoU on val set | 0.5465 |

| SegModel | ensemble of 5 models,guided filter, 42.5 mIoU on val set | 0.5449 |

| CASIA_IVA | casia_iva_model4:DeepLab, Multi-Label | 0.5433 |

| CASIA_IVA | casia_iva_model3:DeepLab, OA-Seg, Multi-Label | 0.5432 |

| CASIA_IVA | casia_iva_model5:Aug_data,DeepLab, OA-Seg, Multi-Label | 0.5425 |

| NTU-SP | Fusion models from two source models (Train + TrainVal) | 0.53565 |

| NTU-SP | 6 ResNet initialized models (models are trained from TrainVal) | 0.5354 |

| NTU-SP | 8 ResNet initialized models + 2 VGG initialized models (with different bn statistics) | 0.5346 |

| SegModel | ensemble by joint categories and guided filter, 42.7 on val set | 0.53445 |

| NTU-SP | 8 ResNet initialized models + 2 VGG initialized models (models are trained from Train only) | 0.53435 |

| NTU-SP | 8 ResNet initialized models + 2 VGG initialized models (models are trained from TrainVal) | 0.53435 |

| Hikvision | Ensemble models | 0.53355 |

| SegModel | ensemble by joint categories and bilateral filter, 42.8 on val set | 0.5332 |

| ACRV-Adelaide | use DenseCRF | 0.5326 |

| SegModel | single model, 41.3 mIoU on valset | 0.53225 |

| DPAI Vison | different denseCRF parameters of 3 models(B) | 0.53065 |

| Hikvision | Single model | 0.53055 |

| ACRV-Adelaide | an ensemble | 0.53035 |

| 360+MCG-ICT-CAS_SP | baseline,152 layers front model with iterative boosting | 0.52925 |

| CASIA_IVA | casia_iva_model2:DeepLab, OA-Seg | 0.52785 |

| DPAI Vison | different denseCRF parameters of 3 models(C) | 0.52645 |

| DPAI Vison | average ensemble of 3 segmentation models | 0.52575 |

| DPAI Vison | different denseCRF parameters of 3 models(A) | 0.52575 |

| CASIA_IVA | casia_iva_model1:DeepLab | 0.5243 |

| SUXL | scene parsing network 5 | 0.52355 |

| SUXL | scene parsing network | 0.52325 |

| SUXL | scene parsing network 3 | 0.5224 |

| ACRV-Adelaide | a single model | 0.5221 |

| SUXL | scene parsing network 2 | 0.5212 |

| SYSU_HCP-I2_Lab | cascade nets | 0.52085 |

| SYSU_HCP-I2_Lab | DCNN with skipping layers | 0.5136 |

| SYSU_HCP-I2_Lab | DeepLab_CRF | 0.51205 |

| SYSU_HCP-I2_Lab | Pixel normalization networks | 0.5077 |

| SYSU_HCP-I2_Lab | ResNet101 | 0.50715 |

| S-LAB-IIE-CAS | Multi-Scale CNN + Bbox_Refine + FixHole | 0.5066 |

| S-LAB-IIE-CAS | Combined with the results of other models | 0.50625 |

| S-LAB-IIE-CAS | Multi-Scale CNN + Attention | 0.50515 |

| NUS_FCRN | trained with training set and val set | 0.5006 |

| NUS-AIPARSE | model3 | 0.4997 |

| NUS_FCRN | trained with training set only | 0.49885 |

| NUS-AIPARSE | model2 | 0.49855 |

| F205_CV | Model fusion of ResNet101 and DilatedNet, with data augmentation and CRF, fine-tuned from places2 scene classification/parsing 2016 pretrained models. | 0.49805 |

| F205_CV | Model fusion of ResNet101 and FCN, with data augmentation and CRF, fine-tuned from places2 scene classification/parsing 2016 pretrained models. | 0.4933 |

| F205_CV | Model fusion of ResNet101, FCN and DilatedNet, with data augmentation and CRF, fine-tuned from places2 scene classification/parsing 2016 pretrained models. | 0.4899 |

| Faceall-BUPT | 6 models finetuned by pre-trained fcn8s and dilatedNet with 3 different images sizes. | 0.4893 |

| NUS-AIPARSE | model1 | 0.48915 |

| Faceall-BUPT | We use six models finetuned by pre-trained fcn8s and dilatedNet with 3 different images sizes. The pixel-wise accuracy is 76.94% and mean of the class-wise IoU is 0.3552. | 0.48905 |

| F205_CV | Model fusion of ResNet101, FCN and DilatedNet, with data augmentation, fine-tuned from places2 scene classification/parsing 2016 pretrained models. | 0.48425 |

| S-LAB-IIE-CAS | Multi-Scale CNN + Bbox_Refine | 0.4814 |

| Faceall-BUPT | 3 models finetuned by pre-trained fcn8s with 3 different images sizes. | 0.4795 |

| Faceall-BUPT | 3 models finetuned by pre-trained dilatedNet with 3 different images sizes. | 0.4793 |

| F205_CV | Model fusion of FCN and DilatedNet, with data augmentation and CRF, fine-tuned from places2 scene classification/parsing 2016 pretrained models. | 0.47855 |

| S-LAB-IIE-CAS | Multi-Scale CNN | 0.4757 |

| Multiscale-FCN-CRFRNN | Multi-scale CRF-RNN | 0.47025 |

| Faceall-BUPT | one models finetuned by pre-trained dilatedNet with images size 384*384. The pixel-wise accuracy is 75.14% and mean of the class-wise IoU is 0.3291. | 0.46565 |

| Baseline:DilatedNet | 0.45665 | |

| Baseline:FCN-8s | 0.44800 | |

| Deep Cognition Labs | Modified Deeplab Vgg16 with CRF | 0.41605 |

| Baseline:SegNet | 0.40785 | |

| mmap-o | FCN-8s with classification | 0.39335 |

| NuistParsing | SegNet+Smoothing | 0.3608 |

| XKA | SegNet trained on ADE20k +CRF | 0.3603 |

| VikyNet | Fine tuned version of ParseNet | 0.0549 |

| VikyNet | Fine tuned version of ParseNet | 0.0549 |

Team information

| SenseCUSceneParsing | Hengshuang Zhao* (SenseTime, CUHK), Jianping Shi* (SenseTime), Xiaojuan Qi (CUHK), Xiaogang Wang (CUHK), Tong Xiao (CUHK), Jiaya Jia (CUHK) [* equal contribution] | We have employed FCN based semantic segmentation for the scene parsing. We propose a context aware semantic segmentation framework. The additional image level information significantly improves the performance under complex scene in natural distribution. Moreover, we find that deeper pretrained model is better. Our pretrained models include ResNet269, ResNet101 from ImageNet dataset, and ResNet152 from Places2 dataset. Finally, we utilize the deeply supervised structure to assist training the deeper model. Our best single model reach 44.65 mIOU and 81.58 pixel accurcy in validation set.

[1]. Long, Jonathan, Evan Shelhamer, and Trevor Darrell. "Fully convolutional networks for semantic segmentation." CVPR. 2015. [2]. He, Kaiming, et al. "Deep residual learning for image recognition." arXiv:1512.03385, 2015. [3]. Lee, Chen-Yu, et al. "Deeply-Supervised Nets." AISTATS, 2015. |

| Adelaide | Zifeng Wu, University of Adelaide

Chunhua Shen, University of Adelaide Anton van den Hengel, University of Adelaide |

We have trained networks with different newly designed structures. One of them performs as well as the Inception-Residual-v2 network in the classification task. It was further tuned for several epochs using the Places365 dataset, which finally obtained even better results on the validation set in the segmentation task. As for FCNs, we mostly followed the settings in our previous technical reports [1, 2]. The best result was obtained by combining the FCNs initialized using two pre-trained networks.

[1] High-performance Semantic Segmentation Using Very Deep Fully Convolutional Networks. https://arxiv.org/abs/1604.04339 [2] Bridging Category-level and Instance-level Semantic Image Segmentation. https://arxiv.org/abs/1605.06885 |

| 360+MCG-ICT-CAS_SP | Rui Zhang (1,2)

Min Lin (1) Sheng Tang (2) Yu Li (1,2) YunPeng Chen (3) YongDong Zhang (2) JinTao Li (2) YuGang Han (1) ShuiCheng Yan (1,3) (1) Qihoo 360 (2) Multimedia Computing Group,Institute of Computing Technology,Chinese Academy of Sciences (MCG-ICT-CAS), Beijing, China (3) National University of Singapore (NUS) |

Technique Details for the Scene Parsing Task:

There are two core and general contributions for our scene parsing system: 1) Local-refinement-network for object boundary refinement, and 2) Iterative-boosting-network for overall parsing refinement. These two networks collaboratively refine the parsing results from two perspectives, and the details are as below: 1) Local-refinement-network for object boundary refinement. This network takes the original image and the K object probability maps (each for one of the K classes) as inputs, and the output is m*m feature maps indicating how each of the m*m neighbors propagates the probability vector to the center point for local refinement. It works similar to bounding-box-refinement in object detection task in spirit, but here locally refine the object boundary instead of object bounding box. 2) Iterative-boosting-network for overall parsing refinement. This network takes the original image and the K object probability maps (each for one of the K classes) as inputs, and the output is the refined probability maps for all classes. It iterative boosting the parsing results in a global way. Also two other tricks are used as below: 1) Global context aggregation: The scene classification information may potentially provide the global context information for decision as well as capture the co-occurrence relationship between scene and object/stuff in scene. Thus, we add the features from an independent scene classification model trained on ILSVRC 2016 Scene Classification dataset into our scene parsing system as contexts. 2) Multi-scale scheme: Considering the limited amount of training data and the various scales of objects in different training samples, we use multi-scale data argumentation in both training and inference stages. High resolution models are also trained on magnified images to capture details and small objects. |

| SegModel | Falong Shen, Peking Univerisity

Rui Gan, Peking University Gang Zeng, Peking Univerisity |

Abstract

Our models are finetuned from resnet152[1] and follow the methods introduced in [2]. References [1] K He,X Zhang,S Ren,J Sun. Deep Residual Learning for Image Recognition. [2] F Shen,G Zeng. Fast Semantic Image Segmentation with High Order Context and Guided Filtering. |

| CASIA_IVA | Jun Fu,Jing Liu,Xinxin Zhu,Longteng Guo,Zhenwei Shen,Zhiwei Fang,Hanqing Lu | We implement image semantic segmentation based on the fused result of the three deep models: DeepLab[1], OA-Seg[2] and the officially public model in this challenge. DeepLab is trained with the framework of Resnet101, and is further improved with object proposals and multiscale prediction combination. OA-Seg is trained with VGG, in which object proposals and multiscale supervision are considered. We argument training data by multiscale and mirrored variants for the above both models. We additionally employ multi-label annotation for images to refine the segmentation results.

[1]Liang-Chieh Chen et.al, DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs,arXiv:1606.00915,2016 [2]Yuhang Wang et.al, Objectness-aware Semantic Segmentation, Accepted by ACM Multimedia, 2016. |

| NTU-SP | Bing Shuai (Nanyang Technological University)

Xiangfei Kong (Nanyang Technological University) Jason Kuen (Nanyang Technological University) Xingxing Wang (Nanyang Technological University) Jianxiong Yin (Nanyang Technological University) Gang Wang* (Nanyang Technological University) Alex Kot (Nanyang Technological University) |

We train our improved fully convolution networks (IFCN) for the scene parsing task. More specifically, we use the pre-trained Convolution Neural Network (pre-trained from ILSVRC CLS-LOC task) as encoder, and then adds a multi-branch deep convolution network to perform multi-scale context aggregation. Finally, simple deconvolution network (without unpooling layers) is used as the decoder to generate the high-resolution label prediction maps. IFCN subsumes the above three network components. The network (IFCN) is trained with the class weighted loss proposed in [Shuai et al, 2016].

[Shuai et al, 2016] Bing Shuai, Zhen Zuo, Bing Wang, Gang Wang. DAG-Recurrent Neural Network for Scene Labeling |

| Hikvision | Qiaoyong Zhong*, Chao Li, Yingying Zhang(#), Haiming Sun*, Shicai Yang*, Di Xie, Shiliang Pu (* indicates equal contribution)

Hikvision Research Institute (#)ShanghaiTech University, work is done at HRI |

[DET]

Our work on object detection is based on Faster R-CNN. We design and validate the following improvements: * Better network. We find that the identity-mapping variant of ResNet-101 is superior for object detection over the original version. * Better RPN proposals. A novel cascade RPN is proposed to refine proposals' scores and location. A constrained neg/pos anchor ratio further increases proposal recall dramatically. * Pretraining matters. We find that a pretrained global context branch increases mAP by over 3 points. Pretraining on the 1000-class LOC dataset further increases mAP by ~0.5 point. * Training strategies. To attack the imbalance problem, we design a balanced sampling strategy over different classes. With balanced sampling, the provided negative training data can be safely added for training. Other training strategies, like multi-scale training and online hard example mining are also applied. * Testing strategies. During inference, multi-scale testing, horizontal flipping and weighted box voting are applied. The final mAP is 65.1 (single model) and 67 (ensemble of 6 models) on val2. [CLS-LOC] A combination of 3 Inception networks and 3 residual networks is used to make the class prediction. For localization, the same Faster R-CNN configuration described above for DET is applied. The top5 classification error rate is 3.46%, and localization error is 8.8% on the validation set. [Scene] For the scene classification task, by drawing support from our newly-built M40-equipped GPU clusters, we have trained more than 20 models with various architectures, such as VGG, Inception, ResNet and different variants of them in the past two months. Fine-tuning very deep residual networks from pre-trained ImageNet models, like ResNet 101/152/200, seemed not to be as good enough as what we expected. Inception-style networks could get better performance in considerably less training time according to our experiments. Based on this observation, deep Inception-style networks, and not-so-deep residuals networks have been used. Besides, we have made several improvements for training and testing. First, a new data augmentation technique is proposed to better utilize the information of original images. Second, a new learning rate setting is adopted. Third, label shuffling and label smoothing is used to tackle the class imbalance problem. Fourth, some small tricks are used to improve the performance in test phase. Finally we achieved a very good top 5 error rate, which is below 9% on the validation set. [Scene Parsing] We utilize a fully convolutional network transferred from VGG-16 net, with a module, called mixed context network, and a refinement module appended to the end of the net. The mixed context network is constructed by a stack of dilated convolutions and skip connections. The refinement module generates predictions by making use of output of the mixed context network and feature maps from early layers of FCN. The predictions are then fed into a sub-network, which is designed to simulate message-passing process. Compared with baseline, our first major improvement is that, we construct the mixed context network, and find that it provides better features for dealing with stuff, big objects and small objects all at once. The second improvement is that, we propose a memory-efficient sub-network to simulate message-passing process. The proposed system can be trained end-to-end. On validation set, the mean iou of our system is 0.4099 (single model) and 0.4156 (ensemble of 3 models), and the pixel accuracy is 79.80% (single model) and 80.01% (ensemble of 3 models). References [1] Ren, Shaoqing, et al. "Faster R-CNN: Towards real-time object detection with region proposal networks." Advances in neural information processing systems. 2015. [2] Shrivastava, Abhinav, Abhinav Gupta, and Ross Girshick. "Training region-based object detectors with online hard example mining." arXiv preprint arXiv:1604.03540 (2016). [3] He, Kaiming, et al. "Deep residual learning for image recognition." arXiv preprint arXiv:1512.03385 (2015). [4] He, Kaiming, et al. "Identity mappings in deep residual networks." arXiv preprint arXiv:1603.05027 (2016). [5] Ioffe, Sergey, and Christian Szegedy. "Batch normalization: Accelerating deep network training by reducing internal covariate shift." arXiv preprint arXiv:1502.03167 (2015). [6] Szegedy, Christian, et al. "Rethinking the inception architecture for computer vision." arXiv preprint arXiv:1512.00567 (2015). [7] Szegedy, Christian, Sergey Ioffe, and Vincent Vanhoucke. "Inception-v4, inception-resnet and the impact of residual connections on learning." arXiv preprint arXiv:1602.07261 (2016). [8] F. Yu and V. Koltun, "Multi-scale context aggregation by dilated convolutions," in ICLR, 2016. [9] J. Long, E. Shelhamer, and T. Darrell, "Fully convolutional networks for semantic segmentation," in CVPR, 2015. [10] S. Zheng, S. Jayasumana, B. Romera-Paredes, V. Vineet, Z. Su, D. Du, C. Huang, and P. Torr, "Conditional random fields as recurrent neural networks," in ICCV, 2015. [11] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, A. Yuille, "DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs", arXiv:1606.00915, 2016. [12] P. O. Pinheiro, T. Lin, R. Collobert, P. Dollar, "Learning to Refine Object Segments", arXiv:1603.08695, 2016. |

| ACRV-Adelaide | Guosheng Lin;

Chunhua Shen; Anton van den Hengel; Ian Reid; Affiliations: ACRV; University of Adelaide; |

Our method is based on multi-level information fusion. We generate multi-level representation of the input image and develop a number of fusion networks with different architectures.

Our models are initialized from the pre-trained residual nets [1] with 50 and 101 layers. A part of the network design in our system is inspired by the multi-scale network with pyramid pooling which is described in [2] and the FCN network in [3]. Our system achieves good performance on the validation set. The IoU score on the validation set is 40.3 for using a single model, which is clearly better than the reported results of the baseline methods in [4]. Applying DenseCRF [5] slightly improves the result. We are preparing a technical report on our method and it will be available in arXiv soon. References: [1] "Deep Residual Learning for Image Recognition", Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun. CVPR 2016. [2] "Efficient Piecewise Training of Deep Structured Models for Semantic Segmentation", Guosheng Lin, Chunhua Shen, Anton van den Hengel, Ian Reid; CVPR 2016 [3] "Fully convolutional networks for semantic segmentation", J Long, E Shelhamer, T Darrell; CVPR 2015 [4] "Semantic Understanding of Scenes through ADE20K Dataset" B. Zhou, H. Zhao, X. Puig, S. Fidler, A. Barriuso and A. Torralba. arXiv:1608.05442 [5] "Efficient Inference in Fully Connected CRFs with Gaussian Edge Potentials", Philipp Krahenbuhl, Vladlen Koltun; NIPS 2012. |

| DPAI Vison | Object detection: Chris Li, Savion Zhao, Bin Liu, Yuhang He, Lu Yang, Cena Liu

Scene classification: Lu Yang, Yuhang He, Cena Liu, Bin Liu, Bo Yu Scene parsing: Bin Liu, Lu Yang, Yuhang He, Cena Liu, Bo Yu, Chris Li, Xiongwei Xia Object detection from video: Bin Liu, Cena Liu, Savion Zhao, Yuhang He, Chris Li |

Object detection:Our methods is based on faster-rcnn and extra classifier. (1) data processing: data equalization by deleting lots of examples in threee dominating classes (person, dog, and bird); adding extra data for classes with training data less than 1000; (2) COCO pre-train; (3) Iterative bounding box regression + multi-scale (trian/test) + random flip images (train / test) (4) Multimodel ensemble: resnet-101 and inception-v3 (5) Extra classifier with 200 classes which helps to promote recall and refine the detection scores of ultimate boxes.

[1] He K, Zhang X, Ren S, et al. Deep residual learning for image recognition[J]. arXiv preprint arXiv:1512.03385, 2015. [2] Ren S, He K, Girshick R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[C]//Advances in neural information processing systems. 2015: 91-99. Scene classification: We trained the model on Caffe[1]. An ensemble of Inception-V3[2] and Inception-V4[3]. We totally integrated four models. Top1 error on validation is 0.431 and top5 error is 0.129. The single model is modified on Inception-V3[2], the top1 error on validation is 0.434, top5 error is 0.133. [1] Jia, Yangqing and Shelhamer, Evan and Donahue, Jeff and Karayev, Sergey and Long, Jonathan and Girshick, Ross and Guadarrama, Sergio and Darrell, Trevor. Caffe: Convolutional Architecture for Fast Feature Embedding. arXiv preprint arXiv:1408.5093. 2014. [2]C.Szegedy,V.Vanhoucke,S.Ioffe,J.Shlens,andZ.Wojna. Rethinking the inception architecture for computer vision. arXiv preprint arXiv:1512.00567, 2015. [3] C.Szegedy,S.Ioffe,V.Vanhoucke. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. arXiv preprint arXiv:1602.07261, 2016. Scene parsing: We trained 3 models on modified deeplab[1] (inception-v3, resnet-101, resnet-152) and only used the ADEChallengeData2016[2] data. Multi-scale \ image crop \ image fliping \ contrast transformation are used for data augmentation and decseCRF is used as post-processing to refine object boundaries. On validation with combining 3 models, witch achieved 0.3966 mIoU and 0.7924 pixel-accuracy. [1] L. Chen, G. Papandreou, I. K.; Murphy, K.; and Yuille, A. L. 2016. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. In arXiv preprint arXiv:1606.00915. [2] B. Zhou, H. Zhao, X. P. S. F. A. B., and Torralba, A. 2016. Semantic understanding of scenes through the ade20k dataset. In arXiv preprint arXiv:1608.05442. Object detection from video: Our methods is based on faster-rcnn and extra classifier. We train Faster-RCNN based on RES-101 with the provided training data. We also train extra classifier with 30 classes which helps to promote recall and refine the detection scores of ultimate boxes. |

| SUXL | Xu Lin SZ UVI Technology Co., Ltd | The proposed model is a combination of some convolutional neural network framework for scene parsing implemented on Caffe. We initialise ResNet-50 and ResNet-101 [1] trained on ImageNet classification dataset; then train this two networks on Place2 scene classification 2016. With some modification for scene parsing task, we train multiscale dilated network [2] initialised by trained parameter of ResNet-101, and FCN-8x and FCN-16x [3] trained parameter of ResNet-50. Considering additional models provided by scene parsing challenge 2016, we do a combination of these models via post network. The proposed model is also refined by fully connected CRF for semantic segmentation [4].

[1]. K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In CVPR, 2016. [2].L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. arXiv:1606.00915, 2016 [3].J. Long, E. Shelhamer, and T. Darrell. Fully convolutional networks for semantic segmentation. In Proc. CVPR, 2015. [4].Krahenbuhl, P. and Koltun, V. Efficient inference in fully connected crfs with gaussian edge potentials. In NIPS, 2011. |

| SYSU_HCP-I2_Lab | Liang Lin (Sun Yat-sen University),

Lingbo Liu (Sun Yat-sen University), Guangrun Wang (Sun Yat-sen University), Junfan Lin (Sun Yat-sen University), Ziyang Tang (Sun Yat-sen University), Qixian Zhou (Sun Yat-sen University), Tianshui Chen (Sun Yat-sen University) |

We design our scene parsing model based on DeepLab2-CRF, and improve it from the following two aspects. First, we incorporate deep, semantic information and shallow, appearance information with skipping layers to produce refined, detailed segmentations. Specifically, We combine the features of the 'fusion' layer (after up-sampled via bilinear interpolation) , the 'res2b' layer and the 'res2c' layer. Second, we develop cascade nets, in which the second network utilize the output of the first network to generate more accurate parsing map. Our ResNet-101 was pre-trained on the standard 1.2M imagenet data and finetuned on ADE20K Dataset. |

| S-LAB-IIE-CAS | Ou Xinyu [1,2]

Ling Hefei [2] Liu Si [1] 1. Chinese Academy of Sciences, Institute of Information Engineering; 2. Huazhong University of Science and Technology (This work was done when the first author worked as an intern at S-Lab of CASIIE.) |

We exploit object-based contextual enhancement strategies to improve the performance of deep convolutional neural network over scene parsing task. Increasing the weights of objects on local proposal regions can enhance the structure characteristics of the object and correct the ambiguous areas which are wrongly judged as stuff. We have verified its effectiveness on ResNet101-like architecture [1], which is designed with multi-scale, CRF, atrous convolutional [2] technologies. We also apply various technologies (such as RPN [3], black hole padding, visual attention, iterative training) to this ResNet101-like architecture. The algorithm and architecture details will be described in our paper (available online shortly).

In this competition, we submit five entries. The first (model A) is a Multi-Scale Resnet101-like model with Fully Connected CRF and Atrous Convolutions, which achieved 0.3486 mIOU and 75.39% pixel-wise accuracy on validation dataset. The second model is a Multi-Scale deep CNN modified by object proposal, which achieved 0.3809 mIOU and 75.69% pixel-wise accuracy. A black hold restoration strategy is attached to model B to generate the model C. The model D attention strategies in deep CNN model. And the model E combined with the results of other four models. [1] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep Residual Learning for Image Recognition.IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016 [2] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, Alan L. Yuille: DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. CoRR abs/1606.00915 (2016) [3] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. Conference on Neural Information Processing Systems (NIPS), 2015 |

| NUS_FCRN | Li Xin, Tsinghua University;

Jin xiaojie, National University of Singapore; Jiashi Feng, National University of Singapore. |

We trained a single fully convolutional neural network with ResNet-101 as frontend model.

We did not use any multiscale data augmentation in both training and testing. |

| NUS-AIPARSE | XIAOJIE JIN (NUS)

YUNPENG CHEN (NUS) XIN LI (NUS) JIASHI FENG (NUS) SHUICHENG YAN (360 AI INSTITUTE, NUS) |

The submissions are based on our proposed Multi-Path Feedback recurrent neural network (MPF-RNN) [1]. MPF-RNN aims to enhancing the capability of RNNs on modeling long-range context information at multiple levels and better distinguish pixels that are easy to confuse in pixel-wise classification. In contrast to CNNs without feedback and RNNs with only a single feedback path, MPF-RNN propagates the contextual features learned at top layers through weighted recurrent connections to multiple bottom layers to help them learn better features with such "hindsight". Besides, we propose a new training strategy which considers the loss accumulated at multiple recurrent steps to improve performance of the MPF-RNN on parsing small objects as well as stabilizing the training procedure.

In this contest, Res101 is used as baseline model. Multi-scale input data augmentation as well as multi-scale testing are used. [1] Jin, Xiaojie, Yunpeng Chen, Jiashi Feng, Zequn Jie, and Shuicheng Yan. "Multi-Path Feedback Recurrent Neural Network for Scene Parsing." arXiv preprint arXiv:1608.07706 (2016). |

| F205_CV | Cheng Zhou

Li Jiancheng Lin Zhihui Lin Zhiguan Yang Dali All came from Tsinghua university, Graduate School at ShenZhen Lab F205,China |

Our team has five student members from Tsinghua university, Graduate School at ShenZhen Lab F205,China. We have joined two sub-tasks of the ILSVRC2016 & COCO challenge which is the Scene Parsing and Object detection from video. We are the first time to attend this competition.

The two of the members have focus on the Scene Parsing, they mainly utilized several model fusion algorithms on some famous and effective CNN models like ResNet[1], FCN[2] and DilatedNet[3, 4] and used CRF to get more context features to improve the classification accuracy and mean IoU rate. Since the image size is large, the image is downsampled before feeding to the network. What's more, we used vertical mirror technique for data augmentation. The places2 scene classification 2016 pretrained model was used to fine-tune ResNet101 and FCN, while DilatedNet fine-tuned from the places2 scene parsing 2016 pretrained model[5]. Later fusion and CRF were added at last. For object detection from video, the biggest challenge is there are more than 2 millions images with very high resolution in total. We didn't think about using the fast-RCNN[6] like models to solve it. It need much more training and testing time. So we chose the ssd[7] which is an effective and efficient framework for object detection. We utilized the ResNet101 as the base model, but it is slower than VGGNet[8]. For testing it can achieve about 10FPS on single GTX TITAN X GPU. However, there are more than 700 thousands images in the test set. It costed lots of time. On tracking task, we have a dynamic adjustment algorithm, but it need a ResNet101 model for scoring the patch. It can just achieve about less than 1FPS. So we cannot do this work on test set. For the submission, we used a simple method to filter the noise proposals and track the object. References: [1] He K, Zhang X, Ren S, et al. Deep residual learning for image recognition[J]. arXiv preprint arXiv:1512.03385, 2015. [2] Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2015: 3431-3440. [3] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. arXiv:1606.00915, 2016. [4] F. Yu and V. Koltun. Multi-scale context aggregation by dilated convolutions. In ICLR, 2016. [5] B. Zhou, H. Zhao, X. Puig, S. Fidler, A. Barriuso and A. Torralba. arXiv:1608.05442 [6] Girshick R. Fast r-cnn[C]//Proceedings of the IEEE International Conference on Computer Vision. 2015: 1440-1448. [8] Liu W, Anguelov D, Erhan D, et al. SSD: Single Shot MultiBox Detector[J]. arXiv preprint arXiv:1512.02325, 2015. [9] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition[J]. arXiv preprint arXiv:1409.1556, 2014. |

| Faceall-BUPT | Xuankun HUANG, BUPT, CHINA

Jiangqi ZHANG, BUPT, CHINA Zhiqun HE, BUPT, CHINA Junfei ZHUANG, BUPT, CHINA Zesang HUANG, BUPT, CHINA Yongqiang Yao, BUPT, CHINA Kun HU, BUPT, CHINA Fengye XIONG, BUPT, CHINA Hongliang BAI, Beijing Faceall co., LTD Wenjian FENG, Beijing Faceall co., LTD Yuan DONG, BUPT, CHINA |

# Classification/Localization

We trained the ResNet-101, ResNet-152 and Inception-v3 for object classification. Multi-view testing and models ensemble is utilized to generate the final classification results. For localization task, we trained a Region Proposal Network to generate proposals of each image, and we fine-tuned two models with object-level annotations of 1,000 classes. Moreover, a background class is added into the network. Then test images are segmented into 300 regions by RPN and these regions are classified by the fine-tuned model into one of 1,001 classes. And the final bounding box is generated by merging the bounding rectangle of three regions. # Object detection We utilize faster-rcnn with the publicly available resnet-101. Other than the baseline, we adopt multi-scale roi to obtain features containing richer context information. For testing, we use 3 scales and merge these results using the simple strategy introduced last year. No validation data is used for training, and flipped images are used in only a third of the training epochs. # Object detection from video We use Faster R-CNN with Resnet-101 to do this as in the object detection task. One fifth of the images are tested with 2 scales. No tracking techniques are used because of some mishaps. # Scene classification We trained a single Inception-v3 network with multi-scale and tested with multi-view of 150 crops. On validation the top-5 error is about 14.56%. # Scene parsing We trained 6 models with net structure inspired by fcn8s and dilatedNet with 3 scales(256,384,512). Then we test with flipped images using pre-trained fcn8s and dilatedNet. The pixel-wise accuracy is 76.94% and mean of the class-wise IoU is 0.3552. |

| Multiscale-FCN-CRFRNN | Shuai Zheng, Oxford

Anurag Arnab, Oxford Philip Torr, Oxford |

This submission is trained based on Conditional Random Fields as Recurrent Neural Networks, (described in Zheng et al., ICCV 2015), with multi-scale training pipeline. Our base model is built on ResNet101, which is only pre-trained on ImageNet. After that, the model is built within a Fully Convolutional Network (FCN) structure and only fine-tuned on MIT Scene Parsing dataset. This is done using a multi-scale training pipeline, similar to Farabet et al. 2013. In the end, this FCN-ResNet101 model is plugged in with CRF-RNN and trained in an end-to-end pipeline. |

| Deep Cognition Labs | Mandeep Kumar, Deep Cognition Labs

Krishna Kishore, Deep Cognition Labs Rajendra Singh, Deep Cognition Labs |

We present these results for scene parsing task that are aquired using a modified Deeplab vgg16 network along with CRF. |

| mmap-o | Qi Zheng, Wuhan University

Cheng Tong, Wuhan University Xiang Li, Wuhan University |

We use the FULLY CONVOLUTIONAL NETWORKS [1] with VGG 16-layer net to parsing the scene images. The model is adopted with 8 pixel stride nets.

Initial results contain some labels irrelevant to the scene. Some high confidence labels are exploited to group the images into different scenes to remove irrelevant labels. Here we use data-driven classification strategy to refine the results. [1] Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation[C]// IEEE Conference on Computer Vision and Pattern Recognition. 2015:1337-1342. |

| NuistParsing | Feng Wang:B-DAT Lab, Nanjing University of Information Science and Technology, China

Zhi Li:B-DAT Lab, Nanjing University of Information Science and Technology, China Qingshan Liu:B-DAT Lab, Nanjing University of Information Science and Technology, China |

Scene parsing problem is extremely challenging due to the diversity of appearance and the complexity of configuration,laying, and occasion. We mainly adopt SegNet architecture for scene parsing work. We first extract the edge information of images from ground truth and take the edge as a new class. Then we re-compute the weights of all classes to overcome the imbalance between classes. We use the new ground truth and new weights to train the model. In addition, we employ super-pixel smoothing to optimize the results.

[1] V. Badrinarayanan, A. Handa, and R. Cipolla. SegNet:a deep convolutional encoder-decoder architecture for robust semantic pixel-wise labelling. arXiv preprint arXiv:1505.07293,2015. [2]Wang F, Li Z, Liu Q. Coarse-to-fine human parsing with Fast R-CNN and over-segment retrieval[C]//2016 IEEE International Conference on Image Processing (ICIP). IEEE, 2016: 1938-1942. |

| XKA | Zengming Shen,Yifei Liu, Lengyue Chen,Honghui Shi, Thomas Huang

University of Illinois at Urbana-Champaign |

SgeNet is trained only on ADE20k dataset and post processed with CRF.

1.SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation Vijay Badrinarayanan, Alex Kendall and Roberto Cipolla 2.Efficient Inference in Fully Connected CRFs with Gaussian Edge Potentials, Philipp Krähenbühl and Vladlen Koltun, NIPS 2011 |

| VikyNet | K.Vikraman , Independent researcher. Graduate from IIT Roorkee | Semantic Segmentation requires careful adjustment of parameters. Due to max pooling, a lot of useful information about edges are lost. A lot of algorithms like Deconvolutional networks try to capture them but at the cost of increased computational time.

FCNs have a lot of advantages over them in terms of processing time. ParseNet has an increased perfomance. I have fine-tuned the model to perform better. References: 1)ParseNet: Looking Wider to See Better 2)Fully Convolutional Networks for Semantic Segmentation |