Overview

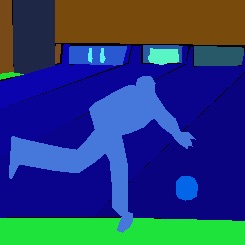

Scene parsing is to segment and parse an image into different image regions associated with semantic categories, such as sky, road, person, and bed. MIT Scene Parsing Benchmark (SceneParse150) provides a standard training and evaluation platform for the algorithms of scene parsing. The data for this benchmark comes from ADE20K Dataset which contains more than 20K scene-centric images exhaustively

annotated with objects and object parts. Specifically, the benchmark is divided into 20K images for training, 2K images for validation, and another batch of held-out images for testing. There are totally 150 semantic categories included for evaluation, which include stuffs like sky, road, grass, and discrete objects like person, car, bed. Note that there are non-uniform distribution of objects occuring in the images, mimicking a more natural object occurrence in daily scene.

For each image, segmentation algorithms will produce a semantic segmentation mask, predicting the semantic category for each pixel in the image. The performance of the algorithms will be evaluated on the mean of pixel-wise accuracy and the Intersection over Union (IoU) averaged over all the 150 semantic categories.

The data in the benchmark has been used in the Scene Parsing Challenge 2016 held jointly with ILSVRC'16, and Places Challenge 2017 held jointly with COCO Challenge. Demo of scene parsing is available. The pre-trained models and demo code of scene parsing are released.